Optimization

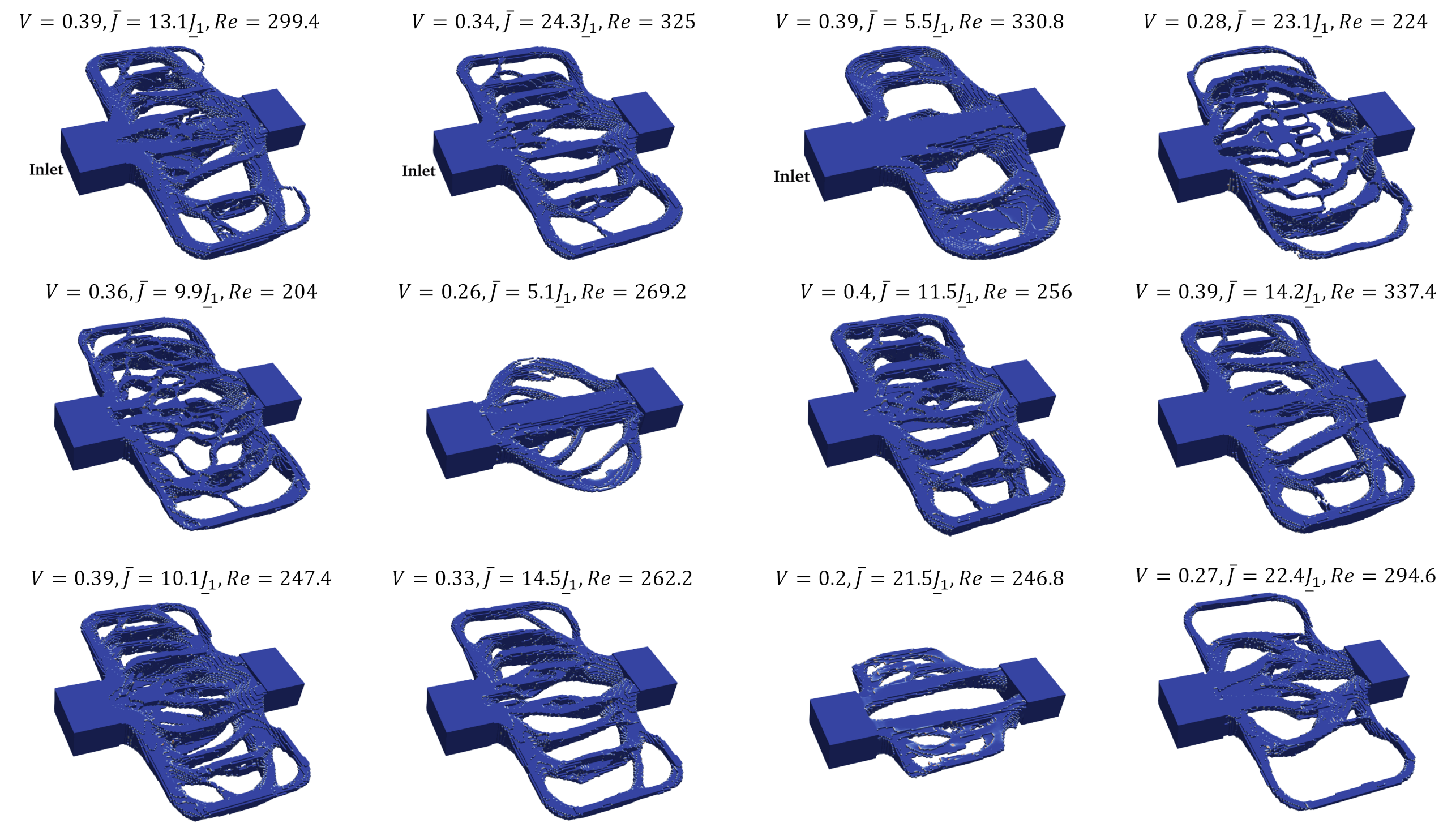

We study methods to accelerate both gradient-based and non-gradient-based optimization. For gradient-based approaches, this mainly involves warm-starting optimizers with ML-derived initial guesses, which can often speed up gradient-based methods by a factor of 2 to 4 or, in some cases, by an order of magnitude.
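The following is a minimal sketch of the warm-starting idea on a toy family of quadratic problems. The objective, `b_of`, `gradient_descent`, and all constants are hypothetical and chosen only for illustration. The snippet fits a linear least-squares model to the optima of previously solved instances, then uses that model's prediction as the starting point for plain gradient descent on a new instance. It is not the group's method. A real application would likely use a richer ML model and a different optimizer.

```python
import numpy as np

# Hypothetical toy problem family: minimize f(x; theta) = 0.5 * x^T Q x - b(theta)^T x.
# Q is symmetric positive definite, so each instance has the unique optimum Q^{-1} b(theta).
Q = np.array([[3.0, 0.5], [0.5, 1.0]])


def b_of(theta):
    """Hypothetical right-hand side; mildly nonlinear so a linear model is only approximate."""
    return np.array([1.0 + 2.0 * theta, -1.0 + theta + 0.1 * theta**2])


def gradient_descent(b, x0, lr=0.2, tol=1e-8, max_iter=10_000):
    """Fixed-step gradient descent on the quadratic; returns (x, iterations used)."""
    x = np.array(x0, dtype=float)
    for k in range(max_iter):
        grad = Q @ x - b  # gradient of 0.5 x^T Q x - b^T x
        if np.linalg.norm(grad) < tol:
            return x, k
        x = x - lr * grad  # lr = 0.2 is stable here since 0.2 < 2 / lambda_max(Q)
    return x, max_iter


# 1. "Training data": optima of previously solved instances (exact solver used for brevity).
thetas = np.linspace(0.0, 1.0, 20)
X_opt = np.array([np.linalg.solve(Q, b_of(t)) for t in thetas])

# 2. ML model: linear least-squares fit x*(theta) ~ [1, theta] @ W.
features = np.column_stack([np.ones_like(thetas), thetas])
W, *_ = np.linalg.lstsq(features, X_opt, rcond=None)

# 3. New instance: cold start from zero vs. warm start from the model's prediction.
theta_new = 0.37
b_new = b_of(theta_new)
x0_warm = np.array([1.0, theta_new]) @ W
x_cold, iters_cold = gradient_descent(b_new, np.zeros(2))
x_warm, iters_warm = gradient_descent(b_new, x0_warm)
print(f"cold start: {iters_cold} iterations, warm start: {iters_warm} iterations")
```

The objective is slightly nonlinear in `theta`, so the linear model's guess lands close to the optimum but not exactly on it. The warm start therefore still needs some iterations, just fewer than the cold start. The counts printed by this toy example illustrate the mechanism only and say nothing about the speedups quoted above.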

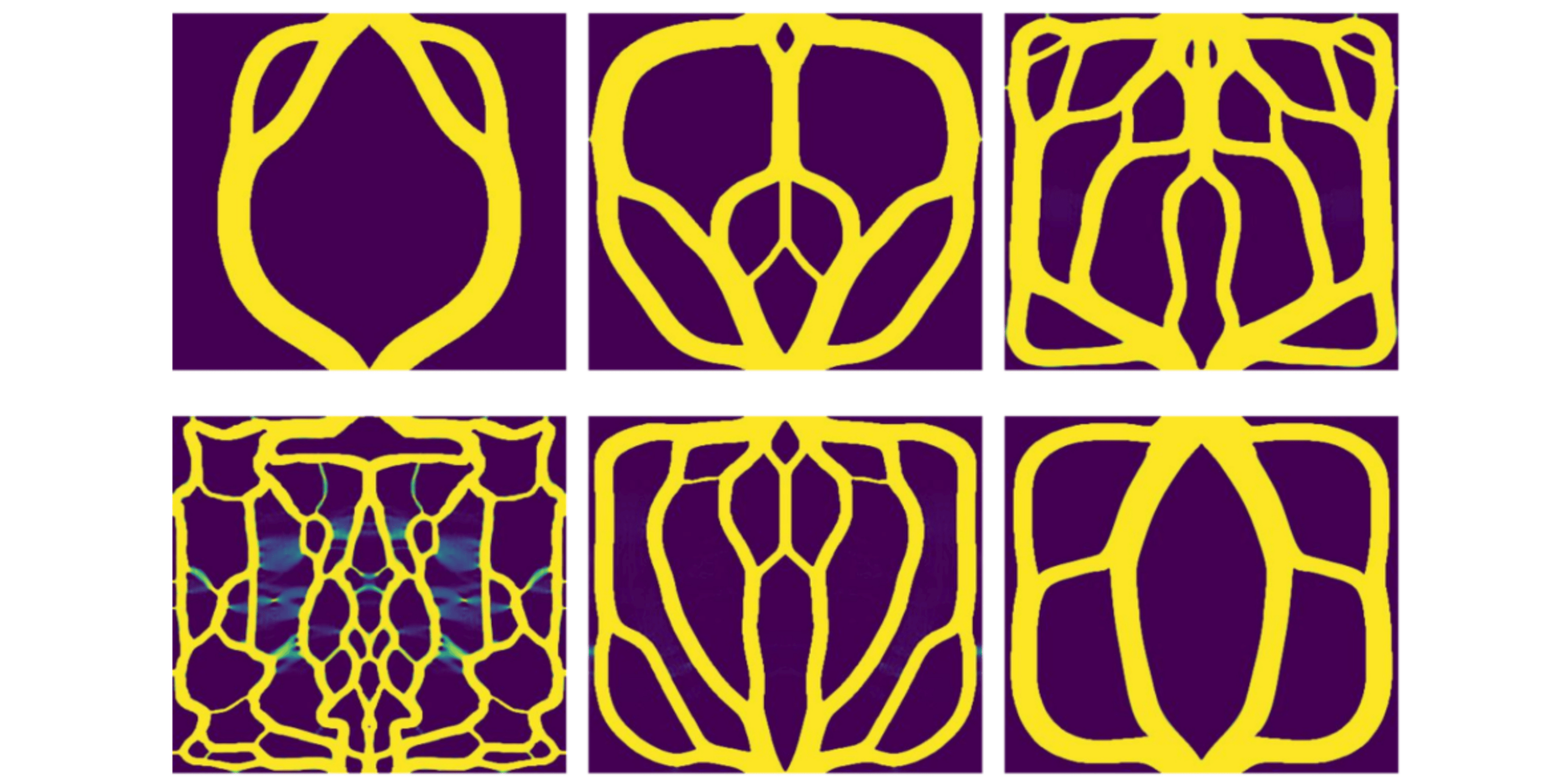

In addition, we investigate non-gradient-based optimization strategies, specifically Bayesian Optimization. On the theory side, we have studied how initializing Bayesian Optimizers can have counter-intuitive effects on convergence performance, as well as how to perform Bayesian Optimization on unbounded domains using Active Expansion Sampling. On the applications side, we have mostly applied these methods to problems in medical devices.
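The following is a minimal, numpy-only sketch of a standard Bayesian Optimization loop on a bounded 1-D domain. It assumes a zero-mean Gaussian process surrogate with a fixed squared-exponential kernel and an expected improvement acquisition maximized over a grid. All function names and hyperparameters (`length_scale`, `xi`, the grid size, `n_init`) are hypothetical choices for illustration. The `n_init` random initial points correspond to the initialization step mentioned above, so varying `n_init` and `seed` is one simple way to experiment with it. The sketch does not implement Active Expansion Sampling or any handling of unbounded domains.

```python
import math

import numpy as np


def rbf_kernel(a, b, length_scale=0.2, variance=1.0):
    """Squared-exponential kernel matrix between 1-D point sets a and b."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_query, noise=1e-6):
    """Posterior mean and std of a zero-mean GP (unit prior variance) at x_query."""
    K = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))  # jitter for stability
    K_s = rbf_kernel(x_train, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.clip(1.0 - np.sum(v**2, axis=0), 1e-12, None)  # 1.0 = kernel variance
    return mean, np.sqrt(var)


# Standard normal CDF built from math.erf, applied elementwise.
_norm_cdf = np.vectorize(lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0))))


def expected_improvement(mean, std, best_y, xi=0.01):
    """Expected improvement over best_y, for minimization."""
    improvement = best_y - mean - xi
    z = improvement / std
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return improvement * _norm_cdf(z) + std * pdf


def bayes_opt(f, lower, upper, n_init=3, n_iter=15, seed=0):
    """Minimize a scalar function f on [lower, upper] with a GP surrogate and EI."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(lower, upper, size=n_init)  # random initial design
    y = np.array([f(v) for v in x])
    grid = np.linspace(lower, upper, 501)  # candidate points for the acquisition
    for _ in range(n_iter):
        # Standardize observations so the unit-variance GP prior is reasonable.
        y_std = (y - y.mean()) / (y.std() + 1e-9)
        mean, std = gp_posterior(x, y_std, grid)
        ei = expected_improvement(mean, std, y_std.min())
        x_next = grid[np.argmax(ei)]
        x = np.append(x, x_next)
        y = np.append(y, f(x_next))
    best = np.argmin(y)
    return x[best], y[best]


best_x, best_y = bayes_opt(lambda x: (x - 0.3) ** 2, 0.0, 1.0)
print(f"best x = {best_x:.3f}, f(best x) = {best_y:.2e}")
```

Hyperparameters are kept fixed to keep the sketch short. Practical implementations usually fit the kernel hyperparameters to the data and optimize the acquisition function with a continuous optimizer rather than a grid.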